I’m confused, because that discussion is marked solved, and the cause there was a missing initramfs due to DKMS confusion. I don’t see how that relates to Btrfs: the kernel and initramfs are co-located, so even if your setup is non-default and uses Btrfs for /boot, a Btrfs that can’t be read means neither the kernel nor the initramfs can be read. In that case it’s definitely not the same issue. Hence my confusion.

From the first screenshot, `btrfs check` thinks /dev/nvme1n1 is not a Btrfs file system. That means the superblock is not merely damaged, it’s missing: the magic (signature) isn’t there.
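If you want to see for yourself whether the signature is there, you can read the 8-byte magic directly. The sketch below is a read-only illustration that assumes the standard Btrfs layout: primary superblock at 64 KiB, copies at 64 MiB and 256 GiB, and the magic `_BHRfS_M` at offset 0x40 inside each superblock. The function name `check_btrfs_magic` is made up for this example; `btrfs inspect dump-super` remains the proper tool.

```shell
# Hypothetical helper: read-only check for the Btrfs magic "_BHRfS_M"
# at each superblock copy. Assumes the standard layout: superblocks at
# 64 KiB, 64 MiB and 256 GiB, magic is 8 bytes at offset 0x40 (64).
check_btrfs_magic() {
    dev=$1
    for sb in 65536 67108864 274877906944; do
        # dd only reads from $dev; tr drops NUL bytes so a zeroed
        # region compares as an empty string instead of warning.
        magic=$(dd if="$dev" bs=1 skip=$((sb + 64)) count=8 2>/dev/null | tr -d '\000')
        if [ "$magic" = "_BHRfS_M" ]; then
            echo "$sb: magic present"
        else
            echo "$sb: magic not found"
        fi
    done
}
```

Run it as root against the partition, e.g. `check_btrfs_magic /dev/nvme1n1p3`. A copy reported as not found may simply lie beyond the end of a device smaller than that offset.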

What’s the output from `blkid`? I want to know what libblkid thinks is on nvme1n1p3: is this really the correct device, or is it LUKS and needs to be opened first?

It’s a very, very, very good idea not to make changes to the file system until we understand the problem. Repairs are (unfortunately) always irreversible, so a wrong repair can make things worse with no way back.

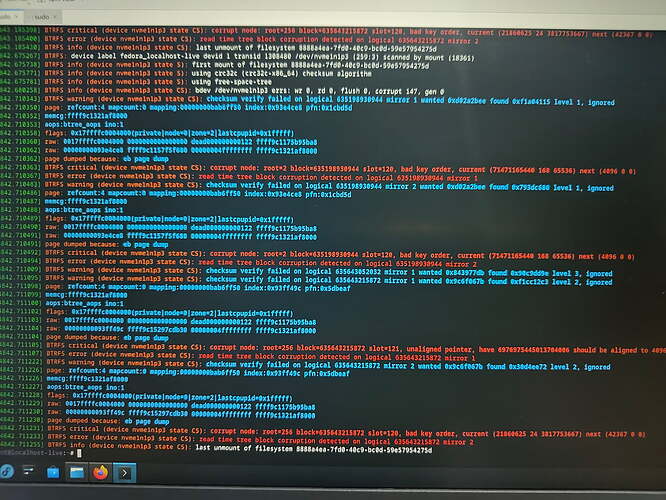

A few screenshots later, there’s a failed mount command. When mount fails and says more information is in dmesg, check dmesg and post all the messages that appear after the command was issued. I’m guessing the kernel really doesn’t see a Btrfs here at all.

The typical failure mode of flash storage is to return transient zeros or garbage, followed either by persistently returning zeros or garbage, or sometimes by going permanently read-only (the device itself, not merely the file system) while presenting some earlier state of the data. That last outcome is obviously close to ideal, even if the behavior is unexpected and not immediately obvious: the first time I experienced it, the device had been read-only for a very long time before I was able to infer what had happened.

Are any other partitions on this drive working? Or is this the only partition that is not working?

What do you get for:

```
btrfs rescue -v super-recover /dev/nvme1n1p3
btrfs inspect dump-s -fa /dev/nvme1n1p3
```

If the second command doesn’t work, adding `-F` might give a clue, but note that forcing the display of whatever is at the superblock locations could reveal private or secret information. We don’t know what happened before the problem, so it’s all speculative at the moment. But if there are no superblocks where they should be, then those locations have been overwritten somehow. With what? That can give a clue, but it’s in the vicinity of 99.9999% user error, because the kernel just isn’t going to overwrite file system superblocks; there are safeguards against that.
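If you’d rather not post raw bytes, a sketch like the following only reports what kind of content sits at each superblock location (Btrfs magic, all zeros, other data, or nothing because the device is smaller than that offset) without printing the data itself. It is a hypothetical helper (`classify_sb_slots` is a made-up name) that assumes the standard layout of 4 KiB superblocks at 64 KiB, 64 MiB and 256 GiB, with the magic at offset 0x40; it only reads from the device.

```shell
# Hypothetical helper: classify each Btrfs superblock slot without
# printing its contents. Assumes 4 KiB superblocks at 64 KiB, 64 MiB
# and 256 GiB, magic "_BHRfS_M" at offset 0x40. Read-only.
classify_sb_slots() {
    dev=$1
    for sb in 65536 67108864 274877906944; do
        # How many bytes exist at this slot (0 if past end of device).
        total=$(dd if="$dev" bs=4096 skip=$((sb / 4096)) count=1 2>/dev/null | wc -c)
        # How many of those bytes are non-zero.
        nonzero=$(dd if="$dev" bs=4096 skip=$((sb / 4096)) count=1 2>/dev/null | tr -d '\000' | wc -c)
        magic=$(dd if="$dev" bs=1 skip=$((sb + 64)) count=8 2>/dev/null | tr -d '\000')
        if [ "$total" -eq 0 ]; then
            state="beyond end of device"
        elif [ "$magic" = "_BHRfS_M" ]; then
            state="btrfs magic present"
        elif [ "$nonzero" -eq 0 ]; then
            state="all zeros"
        else
            state="non-zero data, no btrfs magic"
        fi
        echo "$sb: $state"
    done
}
```

Zeros at every slot would be consistent with the flash failure mode mentioned earlier, while non-zero data without the magic would suggest something actually wrote there.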