Dear Chris (@chrismurphy),

Thank you for your prompt follow-up. Here are the outputs of the commands, shared via fpaste:

`btrfs check`: UNTITLED - Pastebin Service (see also the output below).

`btrfs insp dump-t` only returns an error:

ERROR: tree block bytenr 12714019888096 is not aligned to sectorsize 4096

The problem is that `btrfs check` writes a large number of errors to stderr, and that output is not included in the fpaste. When I redirect stderr to stdout and pipe everything to fpaste, fpaste fails because the output is too large (error: "no file hosting service").

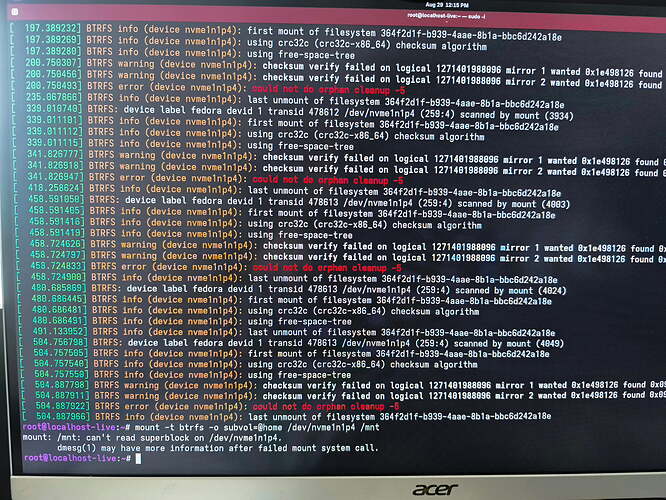

So I redirected the output to files instead; here it is:

root@localhost-live:~# btrfs check /dev/nvme0n1p4 2>btrfs_check.error.log | tee btrfs_check.log

Opening filesystem to check…

Checking filesystem on /dev/nvme0n1p4

UUID: 364f2d1f-b939-4aae-8b1a-bbc6d242a18e

The following tree block(s) is corrupted in tree 256:

tree block bytenr: 493174784, level: 1, node key: (14696013, 1, 0)

found 1009981825024 bytes used, error(s) found

total csum bytes: 882381192

total tree bytes: 8690319360

total fs tree bytes: 7226294272

total extent tree bytes: 419790848

btree space waste bytes: 1710313252

file data blocks allocated: 2037460811776

referenced 1603884122112

The error log contains the following (shortened):

[1/8] checking log skipped (none written)

[2/8] checking root items

[3/8] checking extents

checksum verify failed on 1271401988096 wanted 0x1e498126 found 0x09cacbe1

checksum verify failed on 1271401988096 wanted 0x1e498126 found 0x09cacbe1

checksum verify failed on 1271401988096 wanted 0x1e498126 found 0x09cacbe1

Csum didn’t match

ref mismatch on [16531456 4096] extent item 1, found 0

data extent[16531456, 4096] bytenr mimsmatch, extent item bytenr 16531456 file item bytenr 0

data extent[16531456, 4096] referencer count mismatch (root 256 owner 14696018 offset 1388544) wanted 1 have 0

backpointer mismatch on [16531456 4096]

owner ref check failed [16531456 4096]

ref mismatch on [21241856 4096] extent item 1, found 0

data extent[21241856, 4096] bytenr mimsmatch, extent item bytenr 21241856 file item bytenr 0

data extent[21241856, 4096] referencer count mismatch (root 256 owner 14696018 offset 57344) wanted 1 have 0

backpointer mismatch on [21241856 4096]

owner ref check failed [21241856 4096]

ref mismatch on [1112039424 4096] extent item 1, found 0

data extent[1112039424, 4096] bytenr mimsmatch, extent item bytenr 1112039424 file item bytenr 0

data extent[1112039424, 4096] referencer count mismatch (root 256 owner 14696018 offset 270336) wanted 1 have 0

backpointer mismatch on [1112039424 4096]

owner ref check failed [1112039424 4096]

ref mismatch on [1115729920 4096] extent item 1, found 0

data extent[1115729920, 4096] bytenr mimsmatch, extent item bytenr 1115729920 file item bytenr 0

… (shortened) …

root 256 inode 14747577 errors 2001, no inode item, link count wrong

unresolved ref dir 613 index 28793 namelen 64 name c578ea83c97fc8fae3b8014d6f76080b13a42b65-app.zen_browser.zen.png filetype 1 errors 4, no inode ref

root 256 inode 14747578 errors 2001, no inode item, link count wrong

unresolved ref dir 613 index 28794 namelen 68 name c863804b04b425df0bf5ed2ea1a5e845a5cf0c8e-app.opencomic.OpenComic.png filetype 1 errors 4, no inode ref

root 256 inode 14747579 errors 2001, no inode item, link count wrong

unresolved ref dir 272 index 125488 namelen 4 name user filetype 1 errors 4, no inode ref

root 256 inode 14747580 errors 2001, no inode item, link count wrong

unresolved ref dir 295 index 53372 namelen 27 name session-active-history.json filetype 1 errors 4, no inode ref

root 256 inode 14747581 errors 2001, no inode item, link count wrong

unresolved ref dir 98912 index 3662 namelen 11 name soup.cache2 filetype 1 errors 4, no inode ref

root 256 inode 14747582 errors 2001, no inode item, link count wrong

unresolved ref dir 295 index 53374 namelen 12 name session.gvdb filetype 1 errors 4, no inode ref

ERROR: errors found in fs roots

(Please note that the device numbering differs between boot environments, e.g. in the live system. These should nevertheless be the correct device files, and in my opinion the renaming does not matter.)

Does this help in analyzing the problem? Any hint is very welcome!

The current status is as follows:

- I restored a (too old) snapshot of @home and put it in place, renaming the current, damaged subvolume to @home-corrupted.

- The system boots again with the outdated @home in place.

- I updated the kernel from 6.15.10 to the latest 6.16.x kernel, hoping that a potential bug affecting @home-corrupted has been fixed.

- @home-corrupted mounts in the booted system. Copying files from this corrupted file system reveals only 2 broken files: a Nextcloud log file and a GNOME Evolution cache file, neither of which is important.

- The current problem: I cannot delete those files, because deletion fails with an error reporting that the checksums do not match. Nor can I eliminate the file system errors.

- The device now mainly holds an updated @root file system, @home-corrupted, and the working but outdated @home subvolume. However, the file system contains errors, which I am not comfortable with on a production system!

So my question is: is there a way to remove those errors and repair the file system (e.g. with some kind of fsck)?

Or do I need to go through the full cycle of backing up, reformatting, and restoring?

Fixing the file system in place would be much faster and easier…

Thank you in advance & best regards

Thomas